The time I forgot about the speed of light

Posted at 10:00 on 08 November 2021

The Fallacies of Distributed Computing are a set of eight assumptions, originally noted by L Peter Deutsch and others at Sun Microsystems, that are commonly made by programmers and architects who are new to distributed computing and network architecture. They are:

- The network is reliable;

- Latency is zero;

- Bandwidth is infinite;

- The network is secure;

- Topology doesn't change;

- There is one administrator;

- Transport cost is zero;

- The network is homogeneous.

All eight of these assumptions are wrong. In fact, these eight fallacies are the reason why Martin Fowler came up with his First Law of Distributed Object Design: don't distribute your objects.

The first time I fell foul of these fallacies was particularly embarrassing, because it was in a situation where I should have known better.

I was working for a small web agency at the time. A lot of our work was graphic design, webmastering and SEO for local businesses, but we had a few larger clients on our books for whom we had to do some actual coding and server administration. One of them was an airport taxi reservations company who wanted a new front end for their online portal.

Their database was running on SQL Server Express Edition (the free but hopelessly under-powered version), on the same server as the web front end, hosted in a data centre in Germany. Because that server was running at pretty much full capacity, they asked us to move the database to a larger, beefier one. Since this meant moving up to one of the paid editions, my boss did a bit of shopping around and came to the conclusion that we could save several thousand euros by moving the database to a hosting provider in the USA. Due to the complexity of the code and the fact that it was running on a snowflake server, however, the web front end had to stay put in Germany.

He asked me what I thought about the idea. I may have raised an eyebrow at it, but I didn't say anything. It sounded like a bit of an odd idea, but I didn't see any reason why it shouldn't work.

But I should have. There was one massive, glaring reason -- one that I, in possession of a physics degree, should have spotted straight away.

The speed of light.

The ultimate speed limit of the universe.

It is 299,792,458 metres per second now and it was 299,792,458 metres per second then. It has been 299,792,458 metres per second everywhere in the visible universe for the past 13.8 billion years, and expecting it to change to something bigger in time for our launch date would have been, let's just say, a tad optimistic.

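Now 299,792 kilometres may sound like a lot, but it's only about 48 times the distance from Frankfurt to New York. Every database query is a round trip, so the signal has to cross the Atlantic twice before the web page gets its answer. On top of that, light in optical fibre travels at only about two-thirds of its speed in a vacuum, and the cables don't follow the shortest route. It simply isn't physically possible for a web page in Germany to make more than twenty or so consecutive requests a second to a database in America -- and many web pages in data-driven applications, ours included, need a whole lot more requests than that.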
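The arithmetic behind that limit fits in a few lines. This is a back-of-the-envelope sketch, not a measurement: it assumes a great-circle distance of roughly 6,200 km between Frankfurt and New York, treats "two-thirds of c" as the speed of light in fibre, and ignores routing, switching and server processing time entirely, so real-world numbers would be worse. The 100-query page is a hypothetical example, not a figure from our actual site.

```python
# Back-of-the-envelope bound on sequential database round trips between
# a web server in Germany and a database in the USA.
# Assumption: ~6,200 km great-circle distance (Frankfurt to New York).
# Real cable routes are longer, so these are optimistic lower bounds.

SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, in a vacuum
FIBRE_FRACTION_OF_C = 2 / 3           # assumption: approximate speed in optical fibre
DISTANCE_M = 6_200_000                # assumption: Frankfurt to New York


def round_trip_seconds(distance_m: float, fraction_of_c: float = 1.0) -> float:
    """Minimum time for a request to reach the server and the reply to come back."""
    return 2 * distance_m / (SPEED_OF_LIGHT_M_PER_S * fraction_of_c)


def max_sequential_requests_per_second(distance_m: float, fraction_of_c: float = 1.0) -> float:
    """Upper bound on back-to-back requests when each one waits for the previous reply."""
    return 1 / round_trip_seconds(distance_m, fraction_of_c)


def min_page_time_seconds(queries: int, distance_m: float, fraction_of_c: float = 1.0) -> float:
    """Lower bound on render time for a page that issues its queries one after another."""
    return queries * round_trip_seconds(distance_m, fraction_of_c)


if __name__ == "__main__":
    print(f"c / distance: {SPEED_OF_LIGHT_M_PER_S / DISTANCE_M:.1f}")
    for label, fraction in (("vacuum", 1.0), ("fibre", FIBRE_FRACTION_OF_C)):
        rtt_ms = round_trip_seconds(DISTANCE_M, fraction) * 1000
        rate = max_sequential_requests_per_second(DISTANCE_M, fraction)
        page = min_page_time_seconds(100, DISTANCE_M, fraction)  # hypothetical 100-query page
        print(f"{label}: RTT >= {rtt_ms:.1f} ms, <= {rate:.1f} requests/s, "
              f"100 queries >= {page:.2f} s")
```

Under these assumptions, a single round trip takes at least about 41 ms even in a vacuum, which caps sequential requests at roughly 24 a second, or about 16 a second in fibre. A page that fires off 100 queries one after another would spend somewhere between four and six seconds just waiting for light to cross the ocean.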

Needless to say, when we flipped the switch, the site crashed.

To say this was an embarrassment to me is an understatement. I should have spotted it immediately. I have a university degree in physics, and the physics that I needed to spot this was stuff that I learned in school. What was I thinking?

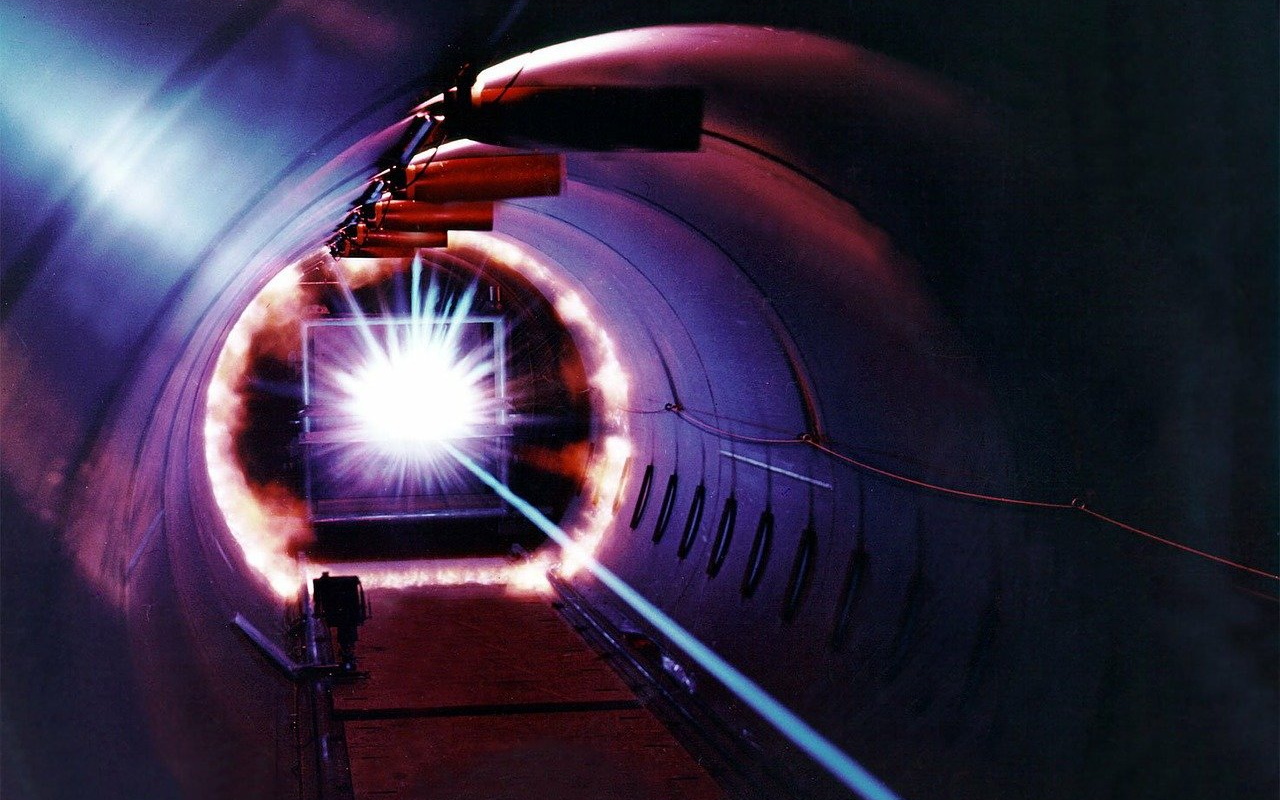

Featured image by WikiImages from Pixabay